Introduction

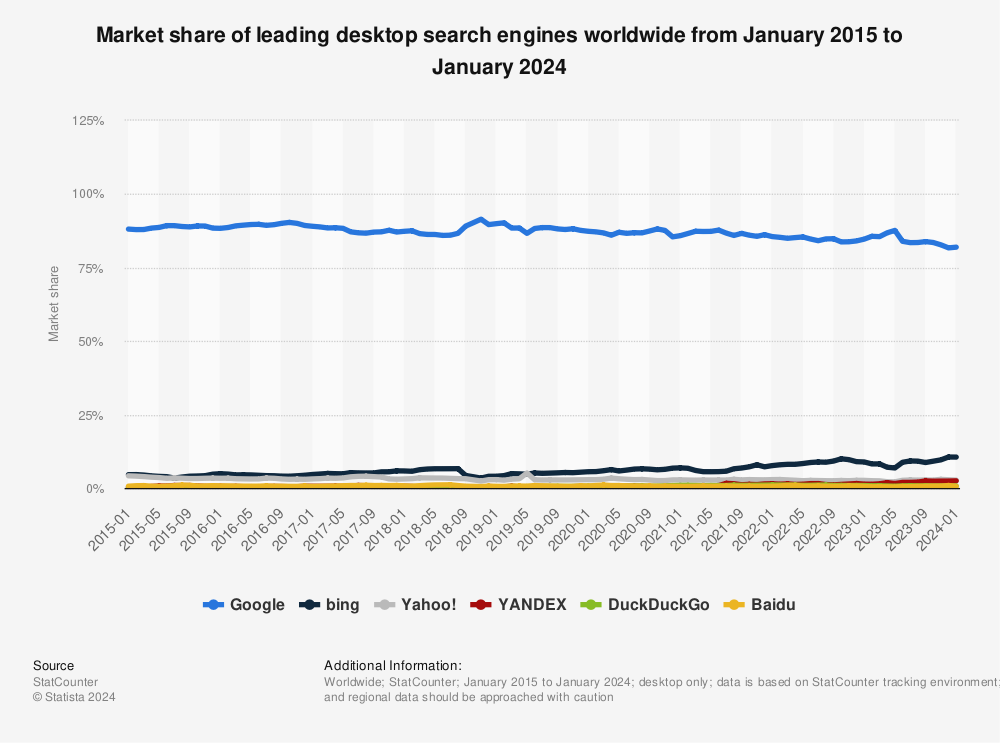

PageRank, the Algorithm is infamous for being Google's original Webpage ranking Algorithm, of course, Google has made huge changes to this algorithm, but the core of it is still very useful to dive into since Google has swept away the market of search engines surpassing and dominating other search engines completely.

PageRank is named after both the term "web page" and co-founder Larry Page. PageRank is simply a way of measuring the importance of website pages.

Random surfer

In the Pagerank algorithm, the web graph is represented as a matrix, known as the transition matrix. This matrix represents the probability of moving from one page to another by following the links on the web. The transition matrix is a square matrix, where each row and column represents a page, and the value in each cell represents the probability of moving from the page in the corresponding row to the page in the corresponding column.

The Pagerank algorithm is based on the concept of a "random surfer", which is a hypothetical web user who starts on a random page and clicks on links randomly, without any bias or preference. The idea behind the random surfer is that the popularity of a page is directly proportional to the probability of a random surfer landing on it. In the Pagerank algorithm, the random surfer is represented by the damping factor "d". The damping factor is typically set to 0.85, which means that there is an 85% chance that the random surfer will follow a link on the current page, and a 15% chance that the surfer will jump to a random page on the web according to the following formula:

PR(A) = (1-d)/N + d * (PR(T1)/C(T1) + ... + PR(Tn)/C(Tn))

The damping factor ensures that the Pagerank scores of all pages converge to a stable solution, even if the web graph contains dead-end pages (pages with no outbound links) or spider traps (pages with infinite loops of links). let us imagine a scenario where: Page A: Links to B Page B: Links to A

If we start with an initial Pagerank score of 1/N for both pages, where N is the total number of pages, then after the first iteration, the Pagerank score of each page will be: PR(A) = (1-d)/N + d PR(B)/1 = 0.075 + 0.85 1/N PR(B) = (1-d)/N + d PR(A)/1 = 0.075 + 0.85 1/N

In the second iteration, the Pagerank scores will be: PR(A) = (1-d)/N + d PR(B)/1 = 0.075 + 0.85 (0.075 + 0.85 1/N) PR(B) = (1-d)/N + d PR(A)/1 = 0.075 + 0.85 (0.075 + 0.85 1/N)

And so on, until the Pagerank scores converge to a stable solution. This state of stability or convergence is called stationary distribution. So how would we exactly represent PageRank's link analysis from instability to convergence? That is where Markov Chain comes in.

Markov Chain

A Markov chain or Markov process is a stochastic model describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event. Informally, this may be thought of as "What happens next depends only on the state of affairs now. And Since a Markov Chain is defined by an initial distribution and a transition matrix, it is the best that can represent the distributive values among links among each other.

Eigenvectors and Eigenvalues

We said that we can have that state of stationary distribution by iterating till convergence to a certain threshold(we call it Alpha). There is another way of reaching this state, which is through eigenvectors and eigenvalues.

Eigenvectors and eigenvalues play a crucial role in the Pagerank algorithm, as they are used to calculate the Pagerank scores of web pages too. The Pagerank scores can be calculated using the eigenvector of the transition matrix corresponding to its largest eigenvalue. The eigenvector represents the relative importance of each page in the web graph, and the eigenvalue represents the scaling factor for the eigenvector. Mathematically, the Pagerank scores can be calculated using the following formula: v = Av Where:

v is the eigenvector corresponding to the largest eigenvalue of the transition matrix A

A is the transition matrix

The equation represents an eigenvalue equation, where v is the eigenvector and Av is the matrix multiplication of A and v.

The Pagerank scores can be calculated by normalizing the eigenvector v such that the sum of its elements is 1. The Pagerank score of each page is then proportional to the value of its corresponding element in the normalized eigenvector. In practice, the transition matrix is often very large and sparse, and computing the eigenvector corresponding to its largest eigenvalue can be computationally expensive. To overcome this, We can use iterative methods instead. Here is an implementation for PageRank Algorithm in Python, using the iterative method:

import numpy as np

def calculate_page_rank(M, r0, epsilon):

num_iteration = 0

do_loop = True

rk1 = np.dot(M, r0)

print(f"iteration r{num_iteration} = " + np.array2string(rk1, precision=2, separator=',', suppress_small=True))

while do_loop:

num_iteration += 1

rk0 = rk1

rk1 = np.dot(M, rk1)

print(f"Iteration r{num_iteration} = " + np.array2string(rk1, precision=2, separator=',', suppress_small=True))

do_loop = not (np.linalg.norm((rk1 - rk0), ord=1) < epsilon)

return rk1

matrix_size = 5

M = np.array(

[

[0, 0, 0, 1, 1 / 3],

[1, 0, 0, 0, 1 / 3],

[0, 1, 0, 0, 0],

[0, 0, 1 / 2, 0, 1 / 3],

[0, 0, 1 / 2, 0, 0]

]

)

r0 = np.array([1 / 5, 1 / 5, 1 / 5, 1 / 5, 1 / 5]).transpose()

epsilon = 0.1

pagerank_result = calculate_page_rank(M, r0, epsilon)

Conclusion

PageRank algorithm has had a significant impact on the field of search engine optimization and has helped to improve the relevance and accuracy of search results.